Tech has got to get a code of ethics, ffs.

I keep wondering what we can do to ensure we’re building a better world with the products we make and if we need a code of ethics. So often I see people fall through the cracks because they’re “edge cases” or “not the target audience.” But at the end of the day, we’re still talking about humans.

Other professions that can deeply alter someone’s life have a code of ethics and conduct they must adhere to. Take physicians’ Hippocratic Oath, for example. It’s got some great guiding principles, which we’ll get into below. Violating this code can mean fines or losing the ability to practice medicine.

We also build things that can deeply alter someone’s life, so why shouldn’t we have one in tech?

While this isn’t a new subject by any stretch, I made a mini one of my own. It’s been boiled down to just one thing and is flexible enough to guide all my other decisions. I even modified it from the Hippocratic Oath:

We will prevent harm whenever possible, as prevention is preferable to mitigation. We will also take into account not just the potential for harm, but the harm’s impact.

That’s meaningful to me because for years, tech had a “move fast, break things” mentality, which screams immaturity. It’s caused carelessness, metrics-obsessed growth, and worse. I don’t need to belabor that here.

We’ve moved fast for long enough. Now let’s grow together and be more intentional about both what and how we build. Maybe the new mantra could be “move thoughtfully and prevent harm,” but maybe that isn’t quite as catchy.

A practical example

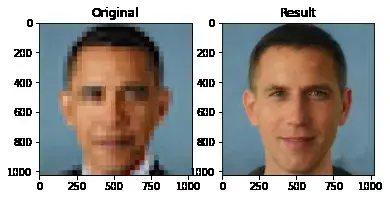

Recently, a developer launched a de-pixelizer. Essentially, it can take a pixelated image and approximate what the person’s face might look like. The results were…not great.

Setting aside that the AI seems to have been trained only on white faces, we have to consider how this process might go wrong and harm people.

Imagine that this algorithm makes it into the hands of law enforcement, who then mistakenly identify someone as a criminal. This mistake could ruin someone’s life, so we have to tread very carefully here.

Even if the AI achieves 90% accuracy, there’s still a 10% chance it could be wrong.

And while the potential for false positives might be relatively low, the impact of those mistakes could be severe. Remember, we aren’t talking about which version of Internet Explorer we should support; we’re talking about someone’s life. We have to be more granular because both the potential and impact of harm are high here.
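To make that 10% feel less abstract, here is a rough back-of-the-envelope sketch. The function name and the figure of 1,000 lookups are my own assumptions for illustration, not numbers from the de-pixelizer itself, and it assumes each lookup is independently right or wrong at the stated accuracy.

```python
def expected_errors(num_lookups: int, accuracy: float) -> float:
    """Expected number of wrong results across num_lookups attempts.

    Assumes (hypothetically) that every lookup is independent and is
    correct with probability `accuracy`.
    """
    if num_lookups < 0:
        raise ValueError("num_lookups must not be negative")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return num_lookups * (1.0 - accuracy)


if __name__ == "__main__":
    # Hypothetical scenario: 1,000 lookups at the 90% accuracy mentioned above.
    wrong = expected_errors(1000, 0.90)
    print(f"Roughly {wrong:.0f} people could be misidentified")
```

Under those assumptions, a tool that sounds "90% accurate" would still be expected to get about one in ten people wrong, and each of those errors is a person, not a rounding error.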

Accident Theory and Preventing Harm

Fractal’s Director of Product Management has this to say about creating products ethically:

“When you create something, you also create the ‘accident’ of it. For example, when cars were invented, everyone was excited about the positive impact of getting places faster, but it also created the negative impact of car crashes and injuries.

How do you evaluate the upside of a new invention along with the possible negative consequences, especially when they have never happened before? So when you’re creating new technology, I believe you have a responsibility, to the best of your ability, to think through the negative, unintended, and problematic uses of that technology, and to weigh it against the good it can do. It becomes particularly challenging when that technology also has the potential to be extremely profitable, but is even more important in those cases.”

If you’re looking for an exercise you can do, try my Black Mirror Brainstorm.

In closing

In this post, we discussed why we need a code of ethics in our world. I shared one thing that I added to mine. We also talked about the impact of not having one. You also learned about Accident Theory and have a shiny new exercise to try.

I’m curious about one thing: What’s one thing you’d include if you made a Hippocratic Oath for tech?

Thanks for reading.